My XDC 2024 talk about VK_EXT_device_generated_commands

Some days ago I wrote about the new VK_EXT_device_generated_commands Vulkan extension that had just been made public. Soon after that, I presented a talk at XDC 2024 with a brief introduction to it. It’s a lightning talk that lasts just about 7 minutes and you can find the embedded video below, as well as the slides and the talk transcription if you prefer written formats.

Truth be told, the topic deserves a longer presentation, for sure. However, when I submitted my talk proposal for XDC I wasn’t sure if the extension was going to be public by the time XDC would take place. This left me with a dilemma: if I submitted a half-slot talk and the extension was not public, I would need to talk for 15 minutes about some general concepts and a couple of NVIDIA vendor-specific extensions: VK_NV_device_generated_commands and VK_NV_device_generated_commands_compute. That would be awkward, so I went with a lightning talk where I could talk about those general concepts and, maybe, talk about some VK_EXT_device_generated_commands specifics if the extension was public, which is exactly what happened.

Fortunately, I will talk again about the extension at Vulkanised 2025. It will be a longer talk and I will cover the topic in more depth. See you in Cambridge in February and, for those not attending, stay tuned because Vulkanised talks are recorded and later uploaded to YouTube. I’ll post the link here and on social media once it’s available.

XDC 2024 recording

Talk slides and transcription

Hello, I’m Ricardo from Igalia and I’m going to talk about Device-Generated Commands in Vulkan. This is a new extension that was released a couple of weeks ago. I wrote CTS tests for it, I helped with the spec and I worked with some actual heroes, some of them present in this room, who managed to get this implemented in a driver.

Device-Generated Commands is an extension that allows apps to go one step further in GPU-driven rendering. It makes it possible to write commands to a storage buffer from the GPU and later execute the contents of the buffer without needing to go through the CPU to record those commands, like you typically do by calling vkCmd functions on regular command buffers.

It’s one step ahead of indirect draws and dispatches, and one step behind work graphs.

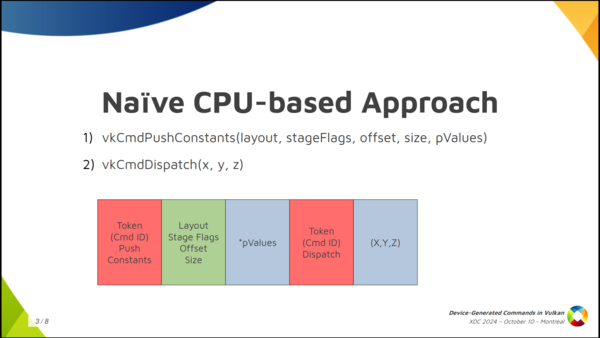

Getting away from Vulkan momentarily, if you want to store commands in a storage buffer there are many possible ways to do it. A naïve approach we can think of is creating the buffer as you see in the slide. We assign a number to each Vulkan command and store it in the buffer. Then, depending on the command, more or less data follows. For example, let’s take the sequence of commands in the slide: (1) push constants followed by (2) dispatch. We can store a token number or command id or however you want to call it to indicate push constants, then we follow with metadata about the command (which is the section in green color) containing the layout, stage flags, offset and size of the push constants. Finally, depending on the size, we store the push constant values, which is the first chunk of data in blue. For the dispatch it’s similar, only that it doesn’t need metadata because we only want the dispatch dimensions.
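To make the naïve scheme concrete, here is a minimal C sketch of such a tagged command stream. Everything in it is hypothetical and unrelated to the real Vulkan API: the command ids, the function names, and the simplification of the metadata to just an offset and a size (the slide also lists the layout and stage flags).

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical command ids for the naive scheme; not part of Vulkan. */
enum { NAIVE_CMD_PUSH_CONSTANTS = 1, NAIVE_CMD_DISPATCH = 2 };

/* Stores one 32-bit word and returns the next write position (in words). */
static size_t naive_put(uint32_t *buf, size_t pos, uint32_t word)
{
    buf[pos] = word;
    return pos + 1;
}

/* Command id, then metadata (offset and size in bytes), then the values. */
static size_t naive_push_constants(uint32_t *buf, size_t pos, uint32_t offset,
                                   uint32_t size, const void *values)
{
    assert(size % 4 == 0); /* this sketch only handles whole words */
    pos = naive_put(buf, pos, NAIVE_CMD_PUSH_CONSTANTS);
    pos = naive_put(buf, pos, offset);
    pos = naive_put(buf, pos, size);
    memcpy(&buf[pos], values, size);
    return pos + size / 4;
}

/* Command id followed directly by the dispatch dimensions, no metadata. */
static size_t naive_dispatch(uint32_t *buf, size_t pos,
                             uint32_t x, uint32_t y, uint32_t z)
{
    pos = naive_put(buf, pos, NAIVE_CMD_DISPATCH);
    pos = naive_put(buf, pos, x);
    pos = naive_put(buf, pos, y);
    return naive_put(buf, pos, z);
}

/* Counts commands by walking the stream. The start of each command is only
 * known after decoding the one before it, so the walk is inherently serial. */
static size_t naive_count_commands(const uint32_t *buf, size_t words)
{
    size_t pos = 0, count = 0;
    while (pos < words) {
        switch (buf[pos]) {
        case NAIVE_CMD_PUSH_CONSTANTS:
            pos += 3 + buf[pos + 2] / 4; /* id, offset, size, then values */
            break;
        case NAIVE_CMD_DISPATCH:
            pos += 4; /* id plus three dimensions */
            break;
        default:
            return count; /* unknown id: stop decoding */
        }
        count++;
    }
    return count;
}
```

The decoding loop in `naive_count_commands` hints at the problem with this format: finding the Nth command requires decoding all the previous ones first.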

But this is not how GPUs work. A GPU would have a very hard time processing this. Also, Vulkan doesn’t work like this either. We want to make it possible to process things in parallel and provide as much information in advance as possible to the driver.

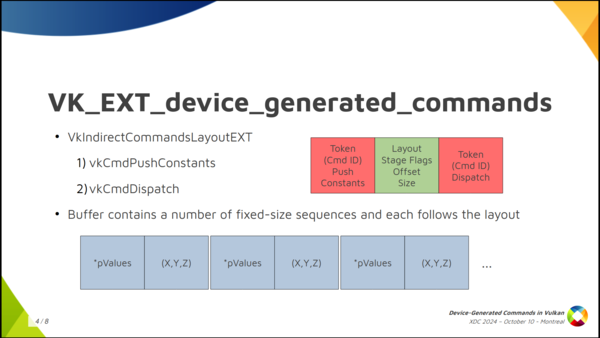

So in Vulkan things are different. The buffer will not contain an arbitrary sequence of commands where you don’t know which one comes next. What we do is create an Indirect Commands Layout. This is the main concept. The layout is like a template for a short sequence of commands. We create this layout using the tokens and metadata that we saw colored red and green in the previous slide.

We specify the layout we will use in advance and, in the buffer, we only store the actual data for each command. The result is that the buffer containing commands (let’s call it the DGC buffer) is divided into small chunks, called sequences in the spec, and the buffer can contain many such sequences, but all of them follow the layout we specified in advance.

In the example, we have push constant values of a known size followed by the dispatch dimensions. Push constant values, dispatch. Push constant values, dispatch. Etc.
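A minimal C sketch of what the DGC buffer contents could look like for this example follows. The struct names and the choice of 16 bytes of push constants are assumptions made for illustration; `DispatchDims` simply mirrors the three 32-bit fields of Vulkan’s `VkDispatchIndirectCommand`. In the real extension, the layout declares the stride of a sequence and the offset of each token, which the struct below stands in for.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Same shape as VkDispatchIndirectCommand: three 32-bit group counts. */
typedef struct {
    uint32_t x, y, z;
} DispatchDims;

/* One sequence under a hypothetical layout: 16 bytes of push constant
 * values followed by the dispatch dimensions. No ids and no metadata are
 * stored here because the layout already describes them. */
typedef struct {
    uint32_t push_constants[4];
    DispatchDims dispatch;
} DgcSequence;

/* Every sequence has the same size, so sequence i starts at a fixed
 * offset and can be located without decoding the ones before it. */
static size_t sequence_offset(size_t index)
{
    return index * sizeof(DgcSequence);
}

/* Fills sequence `index` in a buffer that is suitably aligned for
 * DgcSequence and large enough to hold it. */
static void write_sequence(void *dgc_buffer, size_t index,
                           const uint32_t pc[4], DispatchDims dims)
{
    unsigned char *base = (unsigned char *)dgc_buffer;
    DgcSequence *seq = (DgcSequence *)(base + sequence_offset(index));
    for (size_t i = 0; i < 4; i++)
        seq->push_constants[i] = pc[i];
    seq->dispatch = dims;
}
```

Because the stride is fixed, many sequences can be written or read in parallel, which is the property the naïve format lacked.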

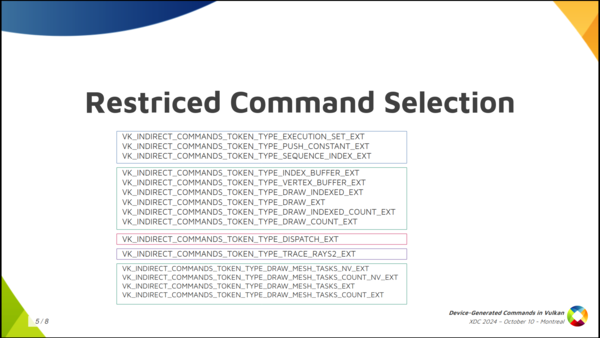

The second thing Vulkan does is to severely limit the selection of available commands. You can’t just start render passes or bind descriptor sets or do anything you can do in a regular command buffer. You can only do a few things, and they’re all in this slide. There’s general stuff like push constants, stuff related to graphics like draw commands and binding vertex and index buffers, and stuff to dispatch compute or ray tracing work. That’s it.

Moreover, each layout must have one token that dispatches work (draw, compute, trace rays) but you can only have one and it must be the last one in the layout.
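The rule about the work-dispatching token can be expressed as a small check. The following C sketch uses a made-up `TokenKind` enum that only loosely follows the categories in the slide; it is not the extension’s real token enumeration and it ignores every other rule the spec imposes on layouts.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical token kinds, loosely based on the categories in the slide. */
typedef enum {
    TOKEN_PUSH_CONSTANT,
    TOKEN_VERTEX_BUFFER,
    TOKEN_INDEX_BUFFER,
    TOKEN_DRAW,
    TOKEN_DISPATCH,
    TOKEN_TRACE_RAYS
} TokenKind;

/* Tokens that launch work: draws, compute dispatches and ray tracing. */
static bool token_is_action(TokenKind kind)
{
    return kind == TOKEN_DRAW || kind == TOKEN_DISPATCH ||
           kind == TOKEN_TRACE_RAYS;
}

/* A layout passes if it has exactly one action token and that token is
 * the last one. */
static bool layout_is_valid(const TokenKind *tokens, size_t count)
{
    if (count == 0)
        return false;
    /* No token before the last one may launch work. */
    for (size_t i = 0; i + 1 < count; i++) {
        if (token_is_action(tokens[i]))
            return false;
    }
    return token_is_action(tokens[count - 1]);
}
```

With this check, a layout of push constants followed by a dispatch passes, while a layout with no dispatching token, with two of them, or with the dispatch in the middle is rejected.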

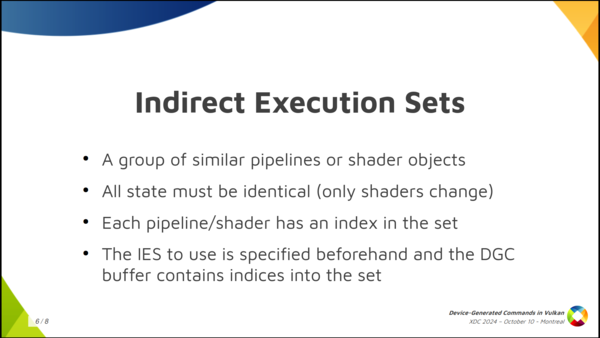

Something that’s optional (not every implementation is going to support this) is being able to switch pipelines or shaders on the fly for each sequence.

Summing up, in implementations that allow you to do it, you have to create something new called Indirect Execution Sets, which are groups or arrays of pipelines that are more or less identical in state and, basically, only differ in the shaders they include.

Inside each set, each pipeline gets an index and you can change the pipeline used for each sequence by (1) specifying the Execution Set in advance (2) using an execution set token in the layout, and (3) storing a pipeline index in the DGC buffer as the token data.
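Conceptually, the pipeline selection works like an array lookup per sequence. The C sketch below models that idea on the CPU with invented types (`Pipeline`, `ExecutionSet`, `IndexedSequence`); it is only an illustration of the indexing, not how a driver implements it. Placing the index at the start of the sequence is also an assumption of this sketch. Returning `NULL` for an out-of-range index is a choice made here for safety; in real Vulkan code an invalid index would simply be invalid usage.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Stand-in for a real pipeline object. */
typedef struct {
    const char *name;
} Pipeline;

/* Stand-in for an Indirect Execution Set: an indexed group of pipelines. */
typedef struct {
    const Pipeline *pipelines;
    uint32_t count;
} ExecutionSet;

/* One sequence under a hypothetical layout with two tokens: an execution
 * set token (its data is the pipeline index) followed by a dispatch. */
typedef struct {
    uint32_t pipeline_index;
    uint32_t x, y, z;
} IndexedSequence;

/* Picks the pipeline a sequence asks for, or NULL if the index is out of
 * range for the set. */
static const Pipeline *select_pipeline(const ExecutionSet *set,
                                       const IndexedSequence *seq)
{
    if (seq->pipeline_index >= set->count)
        return NULL;
    return &set->pipelines[seq->pipeline_index];
}
```

Each sequence in the DGC buffer can carry a different index, so consecutive dispatches may run different shaders without the CPU recording any pipeline binds in between.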

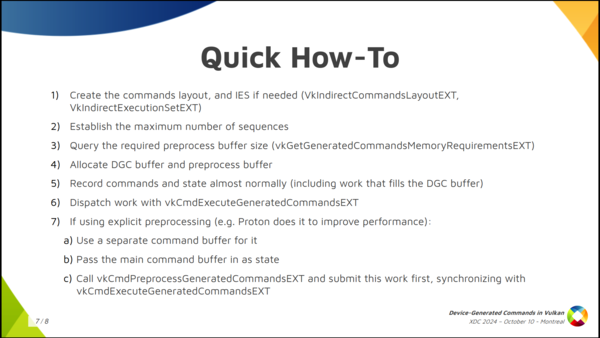

The summary of how to use it would be:

First, create the commands layout and, optionally, create the indirect execution set if you’ll switch pipelines and the driver supports that.

Then, get a rough idea of the maximum number of sequences that you’ll run in a single batch.

With that, create the DGC buffer, query the required preprocess buffer size, which is an auxiliary buffer used by some implementations, and allocate both.

Then, you record the regular command buffer normally and specify the state you’ll use for DGC. This also includes some commands that dispatch work that fills the DGC buffer somehow.

Finally, you dispatch indirect work by calling vkCmdExecuteGeneratedCommandsEXT. Note you need a barrier to synchronize previous writes to the DGC buffer with reads from it.

You can also do explicit preprocessing but I won’t go into detail here.

That’s it. Thanks for watching, thanks Valve for funding a big chunk of the work involved in shipping this, and thanks to everyone who contributed!